Ask Ethan: Is There A Fundamental Reason Why E = mc²?

“Einstein’s equation is amazingly elegant. But is its simplicity real or only apparent? Does E = mc² derive directly from an inherent equivalence between any mass’s energy and the square of the speed of light (which seems like a marvelous coincidence)? Or does the equation only exist because its terms are defined in a (conveniently) particular way?”

Quite arguably, Einstein’s E = mc² is the most famous equation in the entire world. And yet, it isn’t obvious why it had to be this way! Could there have been some other speed besides the speed of light that converts mass to energy? Could there have been a multiplicative constant out in front besides “1” to give the right answer? No, no there couldn’t. If energy and momentum are conserved, and particles have the energies and momenta that they do, there’s no other option.

Come learn, at last, why E = mc², and why simply no other alternative will do.
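As a rough numerical illustration (a sketch, not taken from the linked article), the standard relativistic energy-momentum relation E² = (pc)² + (mc²)² reduces to E = mc² for a particle at rest and to E = pc for a massless one. The function name `total_energy` and the 1 kg example are assumptions chosen for illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact by definition of the metre)

def total_energy(mass_kg: float, momentum: float = 0.0) -> float:
    """Total energy in joules from E^2 = (p c)^2 + (m c^2)^2."""
    return math.hypot(momentum * C, mass_kg * C**2)

# Hypothetical example: 1 kg of matter at rest (p = 0), so E = m c^2.
rest_energy = total_energy(1.0)
print(f"{rest_energy:.3e} J")  # roughly 9e16 joules
```

Setting the mass to zero instead gives E = pc, the energy of a photon with momentum p, so both limits come out of the same relation.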

More Posts from Ocrim1967 and Others

Using All of Our Senses in Space

Today, we and the National Science Foundation (NSF) announced the detection of light and a high-energy cosmic particle that both came from near a black hole billions of trillions of miles from Earth. This discovery is a big step forward in the field of multimessenger astronomy.

But wait — what is multimessenger astronomy? And why is it a big deal?

People learn about different objects through their senses: sight, touch, taste, hearing and smell. Similarly, multimessenger astronomy allows us to study the same astronomical object or event through a variety of “messengers,” which include light of all wavelengths, cosmic ray particles, gravitational waves, and neutrinos — speedy tiny particles that weigh almost nothing and rarely interact with anything. By receiving and combining different pieces of information from these different messengers, we can learn much more about these objects and events than we would from just one.

Lights, Detector, Action!

Much of what we know about the universe comes just from different wavelengths of light. We study the rotations of galaxies through radio waves and visible light, investigate the eating habits of black holes through X-rays and gamma rays, and peer into dusty star-forming regions through infrared light.

The Fermi Gamma-ray Space Telescope, which recently turned 10, studies the universe by detecting gamma rays — the highest-energy form of light. This allows us to investigate some of the most extreme objects in the universe.

Last fall, Fermi was involved in another multimessenger finding — the very first detection of light and gravitational waves from the same source, two merging neutron stars. In that instance, light and gravitational waves were the messengers that gave us a better understanding of the neutron stars and their explosive merger into a black hole.

Fermi has also advanced our understanding of blazars, which are galaxies with supermassive black holes at their centers. Black holes are famous for drawing material into them. But with blazars, some material near the black hole shoots outward in a pair of fast-moving jets. With blazars, one of those jets points directly at us!

Multimessenger Astronomy is Cool

Today’s announcement combines another pair of messengers. The IceCube Neutrino Observatory lies a mile under the ice in Antarctica and uses the ice itself to detect neutrinos. When IceCube caught a super-high-energy neutrino and traced its origin to a specific area of the sky, they alerted the astronomical community.

Fermi completes a scan of the entire sky about every three hours, monitoring thousands of blazars among all the bright gamma-ray sources it sees. For months it had observed a blazar producing more gamma rays than usual. Flaring is a common characteristic in blazars, so this did not attract special attention. But when the alert from IceCube came through about a neutrino coming from that same patch of sky, and the Fermi data were analyzed, this flare became a big deal!

IceCube, Fermi, and followup observations all link this neutrino to a blazar called TXS 0506+056. This event connects a neutrino to a supermassive black hole for the very first time.

Why is this such a big deal? And why haven’t we done it before? Detecting a neutrino is hard since it doesn’t interact easily with matter and can travel unaffected great distances through the universe. Neutrinos are passing through you right now and you can’t even feel a thing!

The neat thing about this discovery — and multimessenger astronomy in general — is how much more we can learn by combining observations. This blazar/neutrino connection, for example, tells us that it was protons being accelerated by the blazar’s jet. Our study of blazars, neutrinos, and other objects and events in the universe will continue with many more exciting multimessenger discoveries to come in the future.

Want to know more? Read the story HERE.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com

CAPE CANAVERAL, Fla. – Space shuttle Discovery lifts off Launch Pad 39A in a billowing swirl of smoke and steam at NASA’s Kennedy Space Center in Florida, beginning its final flight, the STS-133 mission. Launch to the International Space Station was at 4:53 p.m. EST.

Credit: NASA

I love the sky colors ☀️ 🌤❤️

The funny cat stories

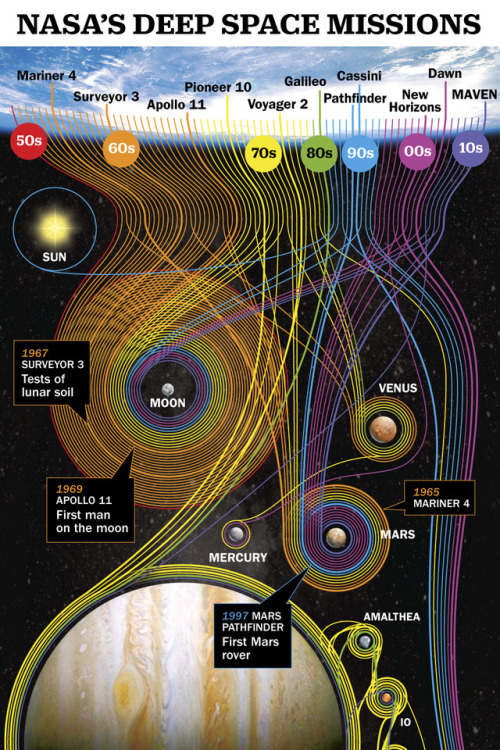

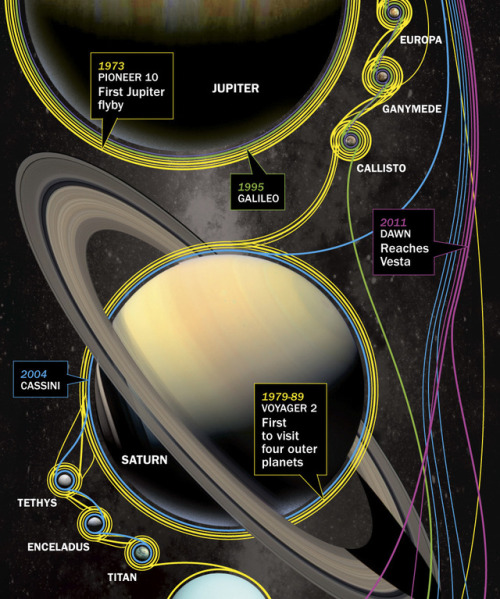

Deep Space Missions

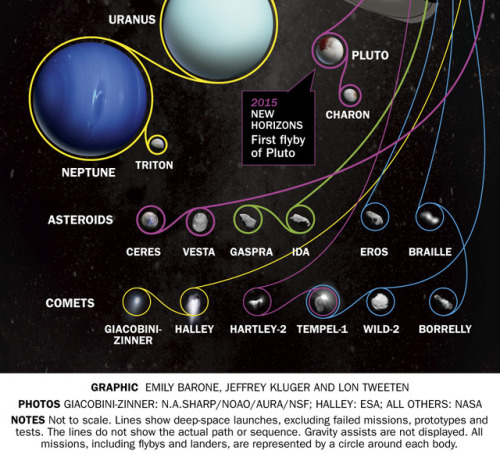

In 40 million years, Mars may have a ring (and one fewer moon)

Nothing lasts forever - especially Phobos, one of the two small moons orbiting Mars. The moonlet is spiraling closer and closer to the Red Planet on its way toward an inevitable collision with its host. But a new study suggests that pieces of Phobos will get a second life as a ring around the rocky planet.

A moon - or moonlet - in orbit around a planet has three possible destinies. If it is just the right distance from its host, it will stay in orbit indefinitely. If it’s beyond that point of equilibrium, it will slowly drift away. (This is the situation with Earth’s Moon; as it gradually pulls away from Earth, its orbit is growing by about 1.5 inches per year.) And if a moon starts out on the too-close side, its orbit will keep shrinking until there is no distance left between it and its host planet.

The Martian ring will last for at least 1 million years - and perhaps for as long as 100 million years, according to the study.

The rest of Phobos will probably remain intact, until it hits the Martian surface. But it won’t be a direct impact; instead, the moonlet’s remains will strike at an oblique angle, skipping along the surface like a smooth stone on a calm lake.

This has probably happened before - scientists believe a group of elliptical craters on the Martian surface were caused by a small moon that skidded to its demise. (If this were to happen on Earth, our planet’s greater mass would produce a crash as big as the one that wiped out the dinosaurs, the researchers noted as an aside.)


images: NASA/JPL, Tushar Mittal using Celestia 2001-2010, Celestia Development Team.

5 Ways the Moon Landing Changed Life on Earth

When Neil Armstrong took his first steps on the Moon 50 years ago, he famously said “that’s one small step for a man, one giant leap for mankind.” He was referring to the historic milestone of exploring beyond our own planet — but there’s also another way to think about that giant leap: the massive effort to develop technologies to safely reach, walk on the Moon and return home led to countless innovations that have improved life on Earth.

Armstrong took one small step on the lunar surface, but the Moon landing led to a giant leap forward in innovations for humanity.

Here are five examples of technology developed for the Apollo program that we’re still using today:

1. Food Safety Standards

As soon as we started planning to send astronauts into space, we faced the problem of what to feed them — and how to ensure the food was safe to eat. Can you imagine getting food poisoning on a spacecraft, hundreds of thousands of miles from home?

We teamed up with a familiar name in food production: the Pillsbury Company. The company soon realized that existing quality control methods were lacking. There was no way to be certain, without extensive testing that destroyed the sample, that the food was free of bacteria and toxins.

Pillsbury revamped its entire food-safety process, creating what became the Hazard Analysis and Critical Control Point system. Its aim was to prevent food safety problems from occurring, rather than catch them after the fact. They managed this by analyzing and controlling every link in the chain, from the raw materials to the processing equipment to the people handling the food.

Today, this is one of the space program’s most far-reaching spinoffs. Beyond keeping the astronaut food supply safe, the Hazard Analysis and Critical Control Point system has also been adopted around the world, and has likely reduced the risk of bacteria and toxins in your local grocery store.

2. Digital Controls for Air and Spacecraft

The Apollo spacecraft was revolutionary for many reasons. Did you know it was the first vehicle to be controlled by a digital computer? Instead of pushrods and cables that pilots manually adjusted to manipulate the spacecraft, Apollo’s computer sent signals to actuators at the flick of a switch.

Besides being physically lighter and less cumbersome, the switch to a digital control system enabled storing large quantities of data and programming maneuvers with complex software.

Before Apollo, there were no digital computers to control airplanes either. Working together with the Navy and Draper Laboratory, we adapted the Apollo digital flight computer to work on airplanes. Today, whatever airline you might be flying, the pilot is controlling it digitally, based on the technology first developed for the flight to the Moon.

3. Earthquake-ready Shock Absorbers

A shock absorber descended from Apollo-era dampers and computers saves lives by stabilizing buildings during earthquakes.

Apollo’s Saturn V rockets had to stay connected to the fueling tubes on the launchpad up to the very last second. That presented a challenge: how to safely move those tubes out of the way once liftoff began. Given how fast they were moving, how could we ensure they wouldn’t bounce back and smash into the vehicle?

We contracted with Taylor Devices, Inc. to develop dampers to cushion the shock, forcing the company to push conventional shock isolation technology to the limit.

Shortly after, we went back to the company for a hydraulics-based high-speed computer. For that challenge, the company came up with fluidic dampers—filled with compressible fluid—that worked even better. We later applied the same technology on the Space Shuttle’s launchpad.

The company has since adapted these fluidic dampers for buildings and bridges to help them survive earthquakes. Today, they are successfully protecting structures in some of the most quake-prone areas of the world, including Tokyo, San Francisco and Taiwan.

4. Insulation for Space

We’ve all seen runners draped in silvery “space blankets” at the end of marathons, but did you know the material, called radiant barrier insulation, was actually created for space?

Temperatures outside of Earth’s atmosphere can fluctuate widely, from hundreds of degrees below to hundreds above zero. To better protect our astronauts, during the Apollo program we invented a new kind of effective, lightweight insulation.

We developed a method of coating mylar with a thin layer of vaporized metal particles. The resulting material had the look and weight of thin cellophane packaging, but was extremely reflective—and pound-for-pound, better than anything else available.

Today the material is still used to protect astronauts, as well as sensitive electronics, in nearly all of our missions. But it has also found countless uses on the ground, from space blankets for athletes to energy-saving insulation for buildings. It also protects essential components of MRI machines used in medicine and much, much more.

Image courtesy of the U.S. Marines

5. Healthcare Monitors

Patients in hospitals are hooked up to sensors that send important health data to the nurse’s station and beyond — which means when an alarm goes off, the right people come running to help.

This technology saves lives every day. But before it reached the ICU, it was invented for something even more extraordinary: sending health data from space down to Earth.

When the Apollo astronauts flew to the Moon, they were hooked up to a system of sensors that sent real-time information on their blood pressure, body temperature, heart rate and more to a team on the ground.

The system was developed for us by Spacelabs Healthcare, which quickly adapted it for hospital monitoring. The company now has telemetric monitoring equipment in nearly every hospital around the world, and it is expanding further, so at-risk patients and their doctors can keep track of their health even outside the hospital.

Only a few people have ever walked on the Moon, but the benefits of the Apollo program for the rest of us continue to ripple widely.

In the years since, we have continued to create innovations that have saved lives, helped the environment, and advanced all kinds of technology.

Now we’re going forward to the Moon with the Artemis program and on to Mars — and building ever more cutting-edge technologies to get us there. As with the many spinoffs from the Apollo era, these innovations will transform our lives for generations to come.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com.

Ask Ethan: Why Are There Only Three Generations Of Particles?

“It is eminently possible that there are more particles out there than the Standard Model, as we know it, presently predicts. In fact, given all the components of the Universe that aren’t accounted for in the Standard Model, from dark matter to dark energy to inflation to the origin of the matter-antimatter asymmetry, it’s practically unreasonable to conclude that there aren’t additional particles.

But if the additional particles fit into the structure of the Standard Model as an additional generation, there are tremendous constraints. They could not have been created in great abundance during the early Universe. None of them can be less massive than 45.6 GeV/c^2. And they could not imprint an observable signature on the cosmic microwave background or in the abundance of the light elements.

Experimental results are the way we learn about the Universe, but the way those results fit into our most successful theoretical frameworks is how we conclude what else does and doesn’t exist in our Universe. Unless a future accelerator result surprises us tremendously, three generations is all we get: no more, no less, and nobody knows why.”

There are three generations of (fermionic) particles in the Universe. In addition to the lightest quarks (up and down), the electron and positron, and the electron neutrino and anti-neutrino, there are two extra, heavy “copies” of this structure. The charm-and-strange quarks plus the top-and-bottom quarks fill the remaining generations of quarks, while the muon and muon neutrino and anti-neutrino plus the tau and tau neutrino and anti-neutrino make up the remaining generations of leptons.

Theoretically, there’s nothing demanding three and only three generations, but experiments have shown that there are no more to within absurd constraints. Here’s the full story of how we know there are only three generations.
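The 45.6 GeV/c^2 bound quoted above matches half the mass of the Z boson: a Z at rest can only decay into a particle-antiparticle pair if each member has less than half the Z's mass, and measurements of Z decays leave room for only three light neutrino species. Here is a minimal sketch of that arithmetic, assuming a Z mass of about 91.19 GeV/c^2 (a value not given in the post) and a hypothetical helper named `pair_threshold`.

```python
Z_MASS_GEV = 91.1876  # assumed Z boson mass in GeV/c^2 (not stated in the post)

def pair_threshold(parent_mass_gev: float) -> float:
    """Upper mass limit for each member of a particle-antiparticle pair
    produced in the decay of a parent particle at rest."""
    return parent_mass_gev / 2.0

print(f"{pair_threshold(Z_MASS_GEV):.1f} GeV/c^2")  # prints "45.6 GeV/c^2"
```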

What is Gravitational Lensing?

A gravitational lens is a distribution of matter (such as a cluster of galaxies) between a distant light source and an observer, that is capable of bending the light from the source as the light travels towards the observer. This effect is known as gravitational lensing, and the amount of bending is one of the predictions of Albert Einstein’s general theory of relativity.

This illustration shows how gravitational lensing works. The gravity of a large galaxy cluster is so strong, it bends, brightens and distorts the light of distant galaxies behind it. The scale has been greatly exaggerated; in reality, the distant galaxy is much further away and much smaller. Credit: NASA, ESA, L. Calcada

There are three classes of gravitational lensing:

1° Strong lensing: where there are easily visible distortions such as the formation of Einstein rings, arcs, and multiple images.

Einstein ring. credit: NASA/ESA&Hubble

2° Weak lensing: where the distortions of background sources are much smaller and can only be detected by analyzing large numbers of sources in a statistical way to find coherent distortions of only a few percent. The lensing shows up statistically as a preferred stretching of the background objects perpendicular to the direction to the centre of the lens. By measuring the shapes and orientations of large numbers of distant galaxies, their orientations can be averaged to measure the shear of the lensing field in any region. This, in turn, can be used to reconstruct the mass distribution in the area: in particular, the background distribution of dark matter can be reconstructed. Since galaxies are intrinsically elliptical and the weak gravitational lensing signal is small, a very large number of galaxies must be used in these surveys.

The effects of foreground galaxy cluster mass on background galaxy shapes. The upper left panel shows (projected onto the plane of the sky) the shapes of cluster members (in yellow) and background galaxies (in white), ignoring the effects of weak lensing. The lower right panel shows this same scenario, but includes the effects of lensing. The middle panel shows a 3-d representation of the positions of cluster and source galaxies, relative to the observer. Note that the background galaxies appear stretched tangentially around the cluster.

3° Microlensing: where no distortion in shape can be seen but the amount of light received from a background object changes in time. The lensing object may be stars in the Milky Way in one typical case, with the background source being stars in a remote galaxy, or, in another case, an even more distant quasar. The effect is small, such that (in the case of strong lensing) even a galaxy with a mass more than 100 billion times that of the Sun will produce multiple images separated by only a few arcseconds. Galaxy clusters can produce separations of several arcminutes. In both cases the galaxies and sources are quite distant, many hundreds of megaparsecs away from our Galaxy.
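To get a feel for the arcsecond-scale separations mentioned above, here is a minimal sketch of the Einstein radius of a point-mass lens, θ_E = sqrt(4GM/c² · D_ls / (D_l · D_s)). The distances used (lens at 500 Mpc, source at 1000 Mpc) are hypothetical, and the sketch ignores cosmological expansion, so treat the result as an order-of-magnitude estimate only.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0      # speed of light, m/s
M_SUN = 1.989e30       # solar mass, kg
MPC = 3.0857e22        # one megaparsec, m
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0

def einstein_radius_arcsec(mass_kg, d_lens_m, d_source_m, d_lens_source_m):
    """Einstein radius of a point-mass lens, in arcseconds."""
    theta_rad = math.sqrt(
        4 * G * mass_kg / C**2 * d_lens_source_m / (d_lens_m * d_source_m)
    )
    return theta_rad * RAD_TO_ARCSEC

# Hypothetical geometry: a 100-billion-solar-mass galaxy halfway to the source.
theta_e = einstein_radius_arcsec(1e11 * M_SUN, 500 * MPC, 1000 * MPC, 500 * MPC)
print(f"Einstein radius: {theta_e:.2f} arcsec")
print(f"Typical image separation (about twice that): {2 * theta_e:.1f} arcsec")
```

With these assumed numbers the Einstein radius comes out near one arcsecond, which is why such lenses need high-resolution telescopes to resolve.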

Gravitational lenses act equally on all kinds of electromagnetic radiation, not just visible light. Weak lensing effects are being studied for the cosmic microwave background as well as galaxy surveys. Strong lenses have been observed in radio and x-ray regimes as well. If a strong lens produces multiple images, there will be a relative time delay between two paths: that is, in one image the lensed object will be observed before the other image.
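The need for very large galaxy samples in weak lensing can be illustrated with a toy simulation. This sketch assumes the simplest weak-lensing approximation, in which each observed ellipticity is the galaxy's random intrinsic ellipticity plus a small constant shear. The shear value (0.02), the shape-noise level (0.3), and the function name `estimate_shear` are all illustrative assumptions, not values from the text.

```python
import random
import statistics

def estimate_shear(n_galaxies, true_shear=0.02, shape_noise=0.3, seed=0):
    """Average one ellipticity component over n_galaxies simulated galaxies.

    Assumption of this sketch: observed = intrinsic (Gaussian) + shear.
    """
    rng = random.Random(seed)
    observed = [rng.gauss(0.0, shape_noise) + true_shear for _ in range(n_galaxies)]
    return statistics.fmean(observed)

# The estimate only settles near the true shear once many galaxies are averaged.
for n in (100, 10_000, 200_000):
    print(n, round(estimate_shear(n), 4))
```

The scatter of the average shrinks like the shape noise divided by the square root of the number of galaxies, so a shear of a few percent is swamped by noise in a sample of a hundred galaxies and only becomes measurable with many thousands.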

As an exoplanet passes in front of a more distant star, its gravity causes the trajectory of the starlight to bend, and in some cases results in a brief brightening of the background star as seen by a telescope. The artistic concept illustrates this effect. This phenomenon of gravitational microlensing enables scientists to search for exoplanets that are too distant and dark to detect any other way. Credits: NASA Ames/JPL-Caltech/T. Pyle

Explanation in terms of space–time curvature

Simulated gravitational lensing by black hole by: Earther

In general relativity, light follows the curvature of spacetime, hence when light passes around a massive object, it is bent. This means that the light from an object on the other side will be bent towards an observer’s eye, just like an ordinary lens. In General Relativity the speed of light depends on the gravitational potential (aka the metric) and this bending can be viewed as a consequence of the light traveling along a gradient in light speed. Light rays are the boundary between the future, the spacelike, and the past regions. The gravitational attraction can be viewed as the motion of undisturbed objects in a background curved geometry or alternatively as the response of objects to a force in a flat geometry.
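How large is the bending? A minimal sketch using the standard general-relativistic result for light passing a point mass, α = 4GM / (c² b), where b is the closest-approach distance. The example of starlight grazing the edge of the Sun is an illustration added here, and the constants are rounded reference values.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0    # speed of light, m/s
M_SUN = 1.989e30     # solar mass, kg
R_SUN = 6.957e8      # solar radius, m

def deflection_arcsec(mass_kg, impact_parameter_m):
    """Bending angle alpha = 4GM / (c^2 b), converted to arcseconds."""
    alpha_rad = 4 * G * mass_kg / (C**2 * impact_parameter_m)
    return math.degrees(alpha_rad) * 3600.0

# Starlight just grazing the Sun's limb.
print(f"{deflection_arcsec(M_SUN, R_SUN):.2f} arcsec")  # about 1.75
```

The angle scales with mass and inversely with distance from the lens, which is why galaxies and clusters, rather than single stars, produce the dramatic arcs and rings described above.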

A galaxy perfectly aligned with a supernova (supernova PS1-10afx) acts as a cosmic magnifying glass, making it appear 100 billion times more dazzling than our Sun. Image credit: Anupreeta More/Kavli IPMU.

