New Supernova Results: Is The Universe Not Accelerating?


“There actually is a nice result from this paper: it perhaps will cause a rethink of the standard likelihood analysis used by teams analyzing supernova data. It also shows just how incredible our data is: even with using none of our knowledge about the matter in the Universe or the flatness of space, we can still arrive at a better-than-3σ result supporting an accelerating Universe. But it also underscores something else that’s far more important. Even if all of the supernova data were thrown out and ignored, we have more than enough evidence at present to be extremely confident that the Universe is accelerating, and made of about 2/3 dark energy.”

Just a few days ago, a new paper was published in the journal Scientific Reports claiming that the evidence for acceleration from Type Ia supernovae was much flimsier than anyone gave it credit for. Rather than living up to the 5-sigma standard for scientific discovery, the authors claimed that there was only marginal, 3-sigma evidence for any sort of acceleration, despite having statistics that were ten times better than the original 1998 announcement. They claimed that an improved likelihood analysis combined with a rejection of all other priors explains why they obtained this result, and used it to cast doubt on not only the concordance model of cosmology, but on the awarding of the 2011 Nobel Prize for dark energy. Despite the sensational coverage this has gotten in the press, the team does quite a few things that are a tremendous disservice to the good science that has been done, and even a simplistic analysis clearly debunks their conclusions.
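For a feel of what separates "3-sigma" from "5-sigma", here is a minimal sketch that converts a significance level into the chance of a fluke. It assumes a plain Gaussian distribution and a two-sided test, which is a simplification and not the likelihood analysis used in the paper; the function name `two_sided_p_value` is made up for this illustration.

```python
import math


def two_sided_p_value(n_sigma: float) -> float:
    """Chance that a Gaussian fluctuation lands at least n_sigma
    away from the mean, on either side (assumed two-sided test)."""
    return math.erfc(n_sigma / math.sqrt(2.0))


p3 = two_sided_p_value(3.0)
p5 = two_sided_p_value(5.0)

print(f"3 sigma: p = {p3:.2e}")
print(f"5 sigma: p = {p5:.2e}")
print(f"5 sigma is roughly {p3 / p5:,.0f} times less likely to be a fluke")
```

Under these assumptions a 3-sigma result is a fluke about 3 times in 1,000, while a 5-sigma result is a fluke less than once in a million tries.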

Dark energy and acceleration are real and here to stay. You owe it to yourself to find out why and how!

More Posts from Evisno and Others

Cosmic horseshoe is not the lucky beacon

A UC Riverside-led team of astronomers use observations of a gravitationally lensed galaxy to measure the properties of the early universe

Although the universe started out with a bang, it quickly evolved to a relatively cool, dark place. After a few hundred million years the lights came back on, and scientists are still trying to figure out why.

Astronomers know that reionization made the universe transparent by allowing light from distant galaxies to travel almost freely through the cosmos to reach us.

However, astronomers don’t fully understand the escape rate of ionizing photons from early galaxies. That escape rate is a crucial but still poorly constrained value, meaning the models developed by astronomers allow a wide range between its upper and lower limits.

That limitation is in part due to the fact that astronomers have been limited to indirect methods of observation of ionizing photons, meaning they may only see a few pixels of the object and then make assumptions about unseen aspects. Direct detection, or directly observing an object such as a galaxy with a telescope, would provide a much better estimate of their escape rate.

In a just-published paper, a team of researchers, led by a University of California, Riverside graduate student, used a direct detection method and found that the previously used constraints have been overestimated by a factor of five.

“This finding opens questions on whether galaxies alone are responsible for the reionization of the universe or if faint dwarf galaxies beyond our current detection limits have higher escape fractions to explain radiation budget necessary for the reionization of the universe,” said Kaveh Vasei, the graduate student who is the lead author of the study.

It is difficult to understand the properties of the early universe in large part because this was more than 12 billion years ago. It is known that around 380,000 years after the Big Bang, electrons and protons bound together to form hydrogen atoms for the first time. Hydrogen atoms make up more than 90 percent of the atoms in the universe, and can very efficiently absorb high-energy photons and become ionized.

However, there were very few sources to ionize these atoms in the early universe. One billion years after the Big Bang, the material between the galaxies was reionized and became more transparent. The main energy source of the reionization is widely believed to be massive stars formed within early galaxies. These stars had a short lifespan and were usually born in the midst of dense gas clouds, which made it very hard for ionizing photons to escape their host galaxies.

Previous studies suggested that about 20 percent of these ionizing photons need to escape the dense gas environment of their host galaxies to significantly contribute to the reionization of the material between galaxies.

Unfortunately, a direct detection of these ionizing photons is very challenging and previous efforts have not been very successful. Therefore, the mechanisms leading to their escape are poorly understood.

This has led many astrophysicists to use indirect methods to estimate the fraction of ionizing photons that escape the galaxies. In one popular method, the gas is assumed to have a “picket fence” distribution, where the space within galaxies is assumed to be composed of either regions of very little gas, which are transparent to ionizing light, or regions of dense gas, which are opaque. Researchers can determine the fraction of each of these regions by studying the light (spectra) emerging from the galaxies.

In this new UC Riverside-led study, astronomers directly measured the fraction of ionizing photons escaping from the Cosmic Horseshoe, a distant galaxy that is gravitationally lensed. Gravitational lensing is the deformation and amplification of a background object by the curving of space and time due to the mass of a foreground galaxy. The details of the galaxy in the background are therefore magnified, allowing researchers to study its light and physical properties more clearly.

Based on the picket fence model, an escape fraction of 40 percent for ionizing photons from the Horseshoe was expected. Therefore, the Horseshoe represented an ideal opportunity to get for the first time a clear, resolved image of leaking ionizing photons to help understand the mechanisms by which they escape their host galaxies.

The research team obtained a deep image of the Horseshoe with the Hubble Space Telescope in an ultraviolet filter, enabling them to directly detect escaping ionizing photons. Surprisingly, the image did not detect ionizing photons coming from the Horseshoe. This team constrained the fraction of escaping photons to be less than 8 percent, five times smaller than what had been inferred by indirect methods widely used by astronomers.

“The study concludes that the previously determined fraction of escaping ionizing radiation of galaxies, as estimated by the most popular indirect method, is likely overestimated in many galaxies,” said Brian Siana, co-author of the research paper and an assistant professor at UC Riverside.

“The team is now focusing on direct determination the fraction of escaping ionizing photons that do not rely on indirect estimates.”

Comments of the Week #92: from the Universe’s birth to ten decades of science

“[I]f there were antimatter galaxies out there, then there should be some interface between the matter and antimatter ones. Either there would be a discontinuity (like a domain wall) separating the two regions, there would be an interface where gamma rays of a specific frequency originated, or there would be a great 2D void where it’s all already annihilated away.

And our Universe contains none of these things. The absence of them in all directions and in all locations tells us that if there are antimatter galaxies out there, they’re far beyond the observable part of our Universe. Instead, every interacting pair we see shows evidence that they’re all made of matter. Beautiful, beautiful matter.”

There’s no better way to start 2016 than… with a bang! Come check out our first comments of the week of the new year.

Ask Ethan: Could The Fabric Of Spacetime Be Defective?

“The topic I’d like to suggest is high-energy relics, like domain walls, cosmic strings, monopoles, etc… it would be great to read more about what these defects really are, what their origin is, what properties they likely have, or, and this is probably the most exciting part for me, how we expect them to look like and interact with the ‘ordinary’ universe.”

So, you’d like to ruin the fabric of your space, would you? Similar to tying a knot in it, stitching it up with some poorly-run shenanigans, running a two-dimensional membrane through it (like a hole in a sponge), etc., it’s possible to put a topological defect in the fabric of space itself. This isn’t just a mathematical possibility, but a physical one: if you break a symmetry in just the right way, monopoles, strings, domain walls, or textures could be produced on a cosmic scale. These could show up in a variety of ways, from abundant new, massive particles to a network of large-scale structure defects in space to a particular set of fluctuations in the cosmic microwave background. Yet when it comes time to put up or shut up, the Universe offers no positive evidence of any of these defects. Save for one, that is: back in 1982, there was an observation of one (and only one) event consistent with a magnetic monopole. 35 years later, we still don’t know what it was.

It’s time to investigate the possibilities, no matter how outlandish they seem, on this week’s Ask Ethan!

death of a star by a supernova explosion,

and the birth of a black hole

The making of Polylion

A little more help for beginners at focusing: in terms of cognitive demand, it is harder to focus on your current task when you have a long to-do list than when only a few tasks are left. So I recommend scheduling fewer than five tasks a day. That makes it much easier to organize work from different fields or with different levels of cognitive demand.

How focusing (aka. not multi-tasking) changed my study life

I had heard it occasionally - that multi-tasking was actually not good for the quality of whatever task I was doing. It made sense, but I loved multi-tasking so much. It gave me the illusion of productivity.

It wasn’t until I actually tried focusing for a while that I realised how much I was actually losing by multi-tasking - educationally and emotionally. Scrolling through tumblr during boring parts of a lecture seemed fine, since there were notes and it probably wouldn’t be tested in such depth anyway. Eating, while scrolling through social media, while watching a tv show, while messaging someone on facebook seemed ‘productive’.

It turns out it was the opposite. It may seem fine, and at times it may actually be okay, but what matters is the principle. Dedicating your whole being to one task, focusing on it, produces much better results. It’s a quality over quantity thing. It also helped to calm me down emotionally - I used to always feel rushed, like there were so many things to do but not enough time to do them. Focusing on one task at a time - though it was hard at first - helped slow me down because I did everything properly, and didn’t feel like I needed to go back and do things over again.

Focusing on one thing wholly is also a form of practising mindfulness. Mindfulness ‘meditation’ isn’t something that requires you to sit down and meditate - it can be applied to our daily life.

Since I started practising this mindful skill of focus, I’ve become much calmer, it’s been so much easier to stay on top of my work load and meet deadlines, I don’t feel rushed, I don’t feel unprepared or unorganised, and I do more quality work than when I used to multi-task.

There are times for multi-tasking and times for focus. Find the right balance and enjoy the task in front of you.

Solar System: Things to Know This Week

There’s even more to Mars.

1. Batten Down the Hatches

Good news for future astronauts: scientists are closer to being able to predict when global dust storms will strike the Red Planet. The winds there don’t carry nearly the same force that was shown in the movie “The Martian,” but the dust lofted by storms can still wreak havoc on people and machines, as well as reduce available solar energy. Recent studies indicate a big storm may be brewing during the next few months.

+ Get the full forecast

2. Where No Rover Has Gone Before

Our Opportunity Mars rover will drive down an ancient gully that may have been carved by liquid water. Several spacecraft at Mars have observed such channels from a distance, but this will be the first up-close exploration. Opportunity will also, for the first time, enter the interior of Endeavour Crater, where it has worked for the last five years. All this is part of a two-year extended mission that began Oct. 1, the latest in a series of extensions going back to the end of Opportunity’s prime mission in April 2004. Opportunity landed on Mars in January of that year, on a mission planned to last 90 Martian days (92.4 Earth days). More than 12 Earth years later, it’s still rolling.

+ Follow along + See other recent pictures from Endeavour Crater
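To see where a figure like "90 Martian days (92.4 Earth days)" comes from, here is a rough sketch of the conversion. It assumes a Martian solar day (a sol) lasts about 24 hours, 39 minutes and 35.244 seconds; that value and the names below are supplied for this illustration and do not come from the post itself.

```python
# Assumed length of one Martian solar day (sol): 24 h 39 min 35.244 s.
SOL_SECONDS = 24 * 3600 + 39 * 60 + 35.244
EARTH_DAY_SECONDS = 24 * 3600


def sols_to_earth_days(sols: float) -> float:
    """Convert a duration in Martian sols to Earth days."""
    return sols * SOL_SECONDS / EARTH_DAY_SECONDS


prime_mission_earth_days = sols_to_earth_days(90)
print(f"90 sols is about {prime_mission_earth_days:.2f} Earth days")
```

With that assumed sol length, the 90-sol prime mission works out to between 92.4 and 92.5 Earth days, in line with the figure quoted above.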

3. An Uphill Climb

Opportunity isn’t the only NASA Mars rover getting a mission extension. On the other side of the planet, the Curiosity rover is driving and collecting samples amid some of the most scenic landscapes ever visited on Mars. Curiosity’s two-year mission extension also began Oct. 1. It’s driving toward uphill destinations, including a ridge capped with material rich in the iron-oxide mineral hematite, about a mile-and-a-half (two-and-a-half kilometers) ahead. Beyond that, there’s an exposure of clay-rich bedrock. These are key exploration sites on lower Mount Sharp, which is a layered, Mount-Rainier-size mound where Curiosity is investigating evidence of ancient, water-rich environments that contrast with the harsh, dry conditions on the surface of Mars today.

+ Learn more

4. Keep a Sharp Lookout

Meanwhile, the Mars Reconnaissance Orbiter continues its watch on the Red Planet from above. The mission team has just released a massive new collection of super-high-resolution images of the Martian surface.

+ Take a look

5. 20/20 Vision for the 2020 Rover

In the year 2020, Opportunity and Curiosity will be joined by a new mobile laboratory on Mars. In the past week, we tested new “eyes” for that mission. The Mars 2020 rover’s Lander Vision System helped guide the rocket to a precise landing at a predesignated target. The system can direct the craft toward a safe landing at its primary target site or divert touchdown toward better terrain if there are hazards in the approaching target area.

+ Get details

Discover the full list of 10 things to know about our solar system this week HERE.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com

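“Earendel” is a star that existed within the first billion years after the Big Bang. Its light took a full 12.9 billion years to reach our Earth!

It is at least 50 times as massive as our Sun and millions of times brighter. Normally it can’t be seen from Earth, but a galaxy cluster sitting between us and “Earendel” acted as a lens!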

A View into the Past

Our Hubble Space Telescope just found the farthest individual star ever seen to date!

Nicknamed “Earendel” (“morning star” in Old English), this star existed within the first billion years after the universe’s birth in the big bang. Earendel is so far away from Earth that its light has taken 12.9 billion years to reach us, far eclipsing the previous single-star record holder whose light took 9 billion years to reach us.

Though Earendel is at least 50 times the mass of our Sun and millions of times as bright, we’d normally be unable to see it from Earth. However, the mass of a huge galaxy cluster between us and Earendel has created a powerful natural magnifying glass. Astronomers expect that the star will be highly magnified for years.

Earendel will be observed by NASA’s James Webb Space Telescope. Webb's high sensitivity to infrared light is needed to learn more about this star, because its light is stretched to longer infrared wavelengths due to the universe's expansion.

We’re Way Below Average! Astronomers Say Milky Way Resides In A Great Cosmic Void

“If there weren’t a large cosmic void that our Milky Way resided in, this tension between different ways of measuring the Hubble expansion rate would pose a big problem. Either there would be a systematic error affecting one of the methods of measuring it, or the Universe’s dark energy properties could be changing with time. But right now, all signs are pointing to a simple cosmic explanation that would resolve it all: we’re simply a bit below average when it comes to density.”

When you think of the Universe on the largest scales, you likely think of galaxies grouped and clustered together in huge, massive collections, separated by enormous cosmic voids. But there’s another kind of cluster-and-void out there: a very large volume of space that has its own galaxies, clusters and voids, but is simply higher or lower in density than average. If our galaxy resided near the center of one such region, we’d measure the expansion rate of the Universe to be higher or lower than average when we used nearby techniques. But if we measured the global expansion rate, such as via baryon acoustic oscillations or the fluctuations in the cosmic microwave background, we’d actually arrive at the true, average rate.

We’ve been seeing an important discrepancy for years, and yet the cause might simply be that the Milky Way lives in a large cosmic void. The data supports it, too! Get the story today.

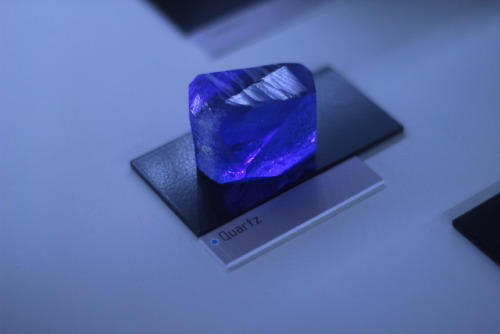

I visited Nantes’ Natural History Museum.
